Apple's Genius Move to Supercharge Your Phone's AI!

Have you ever wished your phone could understand you better or give smarter responses? That dream is getting closer to reality thanks to some new AI research from Apple.

In a recently published paper, Apple researchers outlined a clever way to run more advanced artificial intelligence systems on phones, even ones with limited memory. This could allow next-generation AI assistants, such as a future version of Siri, to have more detailed conversations and provide more helpful information.

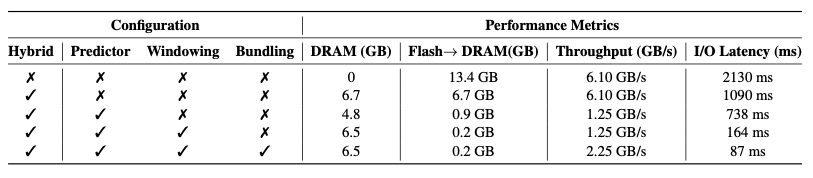

The key innovation is finding a way to store complex AI models using a phone's flash memory rather than RAM. RAM is faster but more limited, while flash can hold much more data.

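The researchers came up with smart techniques to load only small chunks of the AI model into RAM as needed, instead of loading the whole thing. One technique, which the paper calls "windowing", keeps the data used for recent words in RAM and reuses it instead of reloading it each time. Another, "row-column bundling", reads related pieces of the model from flash in larger chunks, which suits the way flash memory works best.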
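
To make the idea of reusing recent data more concrete, here is a minimal Python sketch. It is a toy illustration, not the authors' implementation: the class name, the `window_size` parameter and the dictionary standing in for flash storage are all assumptions made for this example.

```python
from collections import deque


class SlidingWindowNeuronCache:
    """Toy cache that keeps recently used model pieces in RAM.

    Hypothetical sketch: 'flash' is simulated by a plain dict.
    """

    def __init__(self, flash, window_size):
        self.flash = flash                        # id -> weights (stand-in for flash storage)
        self.window = deque(maxlen=window_size)   # ids needed by the most recent tokens
        self.ram = {}                             # id -> weights currently held in RAM
        self.flash_reads = 0                      # number of items fetched from flash

    def step(self, active_ids):
        """Make the pieces needed for one token available in RAM."""
        active = set(active_ids)
        # Fetch only what is not already resident in RAM.
        for n in active.difference(self.ram):
            self.ram[n] = self.flash[n]
            self.flash_reads += 1
        # Slide the window; the deque drops the oldest entry automatically.
        self.window.append(active)
        # Evict anything no token in the window still needs.
        still_needed = set().union(*self.window)
        for n in list(self.ram):
            if n not in still_needed:
                del self.ram[n]
        return [self.ram[n] for n in sorted(active)]


# Made-up data: ten "pieces" of a model, three tokens processed in a row.
flash = {i: [float(i)] for i in range(10)}
cache = SlidingWindowNeuronCache(flash, window_size=2)
for needed in ([1, 2, 3], [2, 3, 4], [4, 5]):
    cache.step(needed)
print(cache.flash_reads)  # 5 reads, versus 8 if everything were reloaded each time
```

In this toy run, overlapping requests are served from RAM, so only five items are read from flash rather than eight. The real system decides what to load based on the model's behaviour, which this sketch does not attempt to reproduce.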

Their methods allow an AI system up to twice as big as the available RAM to run efficiently. Compared with a naive approach to loading the model from flash, the AI ran 4-5 times faster on a CPU and 20-25 times faster on a GPU.
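
For a rough sense of what "twice as big as the available RAM" means in numbers, here is a back-of-the-envelope calculation in Python. It assumes 16-bit weights (2 bytes per parameter), and the RAM budgets are made-up figures for illustration, not measurements from the paper. The function names are hypothetical.

```python
def model_size_gb(num_params, bytes_per_param=2):
    """Approximate storage for the model's weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def fits_with_flash_offload(num_params, ram_budget_gb, max_ratio=2.0, bytes_per_param=2):
    """True if the model is at most `max_ratio` times the RAM budget.

    max_ratio=2.0 mirrors the "up to twice the available RAM" claim.
    """
    return model_size_gb(num_params, bytes_per_param) <= max_ratio * ram_budget_gb


params = 7_000_000_000  # a 7-billion-parameter model
print(model_size_gb(params))                 # 14.0 GB at 2 bytes per parameter
print(fits_with_flash_offload(params, 7.0))  # True: 14 GB is twice a 7 GB budget
print(fits_with_flash_offload(params, 6.0))  # False: 14 GB exceeds twice 6 GB
```

So a model of this size, which would not fit in 7 GB of RAM on its own, falls just within the range the technique targets.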

Image credit: Alizadeh, K., Mirzadeh, I., Belenko, D. et al. LLM in a flash: Efficient Large Language Model Inference with Limited Memory. arXiv preprint arXiv:2312.11514 (2023).

What does this mean in practical terms? It paves the way for more advanced AI algorithms, like those behind chatbots, voice assistants and automatically generated images, to work on phones. The extra AI power will enable apps to be more conversational, creative and intuitive.

The researchers tested their techniques on AI models with billions of parameters, such as OPT 6.7B and Falcon 7B, showing they could run quickly even when only part of the model fits in RAM at once.

While not a magic bullet, Apple's ingenious use of flash memory overcomes a major barrier to getting the latest AI advances on mobile devices. Once these more powerful AI systems can run efficiently on phones, it opens up possibilities for new intelligent features and functionality.

Key Takeaways:

- Apple researchers found a way to store complex AI models using a phone's flash memory instead of limited RAM

- Their techniques involve intelligently loading only parts of the model into RAM as needed

- This enables AI models up to twice the size of the available RAM to run efficiently, with 4-5x faster performance on CPU than a naive loading approach

- It opens the door for more advanced AI apps and features like conversational assistants on phones

- While not instantaneous, this approach overcomes a major technical challenge to getting more powerful AI on mobile devices

So while you may need to wait a bit longer, someday your phone could have an AI assistant that converses as naturally as a human! Apple's work suggests the technology is moving briskly in that direction.

This blog post summarises key points from the research paper 'LLM in a flash: Efficient Large Language Model Inference with Limited Memory' by Alizadeh et al. (2023). Full credit goes to the authors for their work developing these AI techniques.
