Code Prompting: A New Horizon in AI’s Reasoning Capabilities

Conditional reasoning is a fundamental aspect of intelligence in both humans and artificial intelligence (AI) systems. It’s the process of making decisions or drawing conclusions based on specific conditions or premises. In daily life we often use conditional reasoning without realizing it: whether to take an umbrella, for example, depends on the weather forecast. AI systems, particularly large language models (LLMs), attempt to mimic this essential ability.

While LLMs such as GPT-3.5 have shown remarkable capabilities across many natural language processing tasks, their conditional reasoning abilities have been comparatively limited and less explored. A recent research paper addresses this gap with an approach called “code prompting”, designed to elicit conditional reasoning in LLMs trained on both text and code.

The Concept of Code Prompting

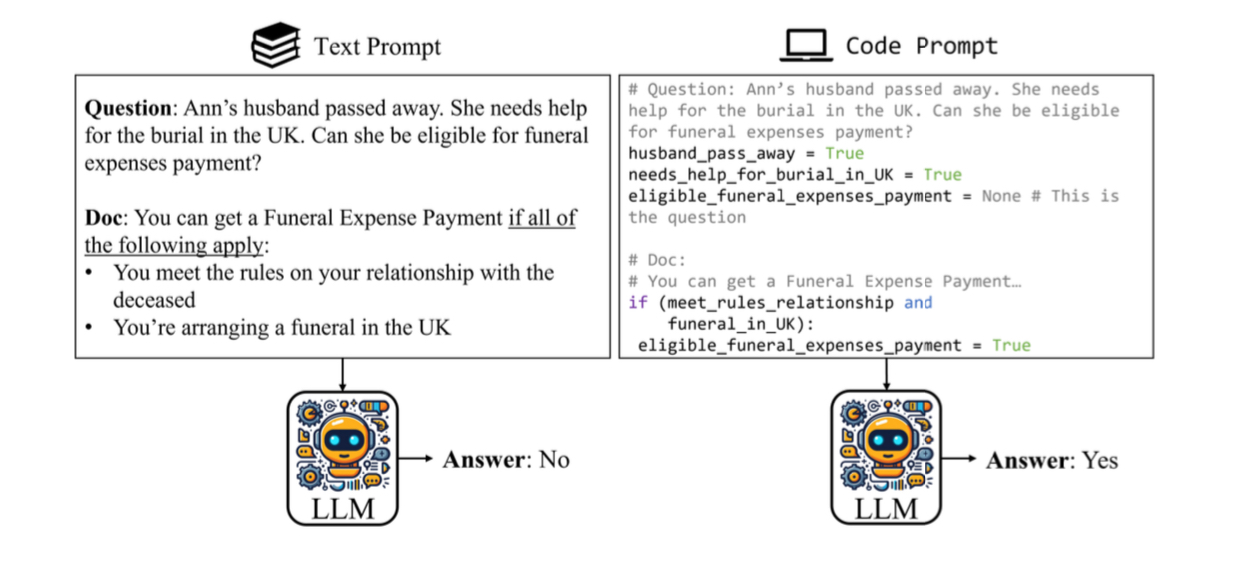

Image Source: Puerto, H., Tutek, M., Aditya, S., Zhu, X., & Gurevych, I. (2024). Code Prompting Elicits Conditional Reasoning Abilities in Text+Code LLMs. arXiv preprint arXiv:2401.10065.

Code prompting is a technique in which a natural language problem is transformed into code before it’s presented to the LLM. The code is not a bare sequence of statements: it keeps the original text as comments, embedding the textual logic within the code’s structure. The approach aims to exploit the strengths of LLMs trained on both text and code, potentially unlocking stronger reasoning than plain text prompts achieve.
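To make the idea concrete, here is a minimal Python sketch of what a code prompt might look like. It assumes the translation from text to code has already been done by hand; the `Premise` class, the `build_code_prompt` helper and the pension example are hypothetical illustrations, not the paper’s exact prompt format.

```python
import ast
from dataclasses import dataclass


@dataclass
class Premise:
    text: str  # original natural-language sentence, kept verbatim
    code: str  # hand-written code rendering of that sentence (hypothetical)


def build_code_prompt(premises: list[Premise], question: str) -> str:
    """Render each premise as code, preceded by its original text as a comment."""
    lines: list[str] = []
    for premise in premises:
        lines.append(f"# {premise.text}")
        lines.append(premise.code)
    # The question stays in natural language, also as a comment.
    lines.append(f"# Question: {question}")
    return "\n".join(lines)


premises = [
    Premise("I am 67 years old.", "age = 67"),
    Premise(
        "You can get the pension if you are over 66.",
        "if age > 66:\n    eligible_for_pension = True",
    ),
]
prompt = build_code_prompt(premises, "Can I get the pension?")
ast.parse(prompt)  # sanity check: raises SyntaxError if the code part is not valid Python
print(prompt)
```

Printing `prompt` shows the interleaved structure: each natural-language sentence appears as a `#` comment directly above the code that encodes it, with the question left as a trailing comment. The `ast.parse` call only checks that the code is well-formed; the LLM reads the prompt rather than executing it.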

Testing and Results: A Leap Forward in Conditional Reasoning

To evaluate code prompting, the researchers ran experiments on two conditional reasoning QA datasets: ConditionalQA and BoardgameQA. Code prompting consistently outperformed regular text prompting, with improvements ranging from 2.6 to 7.7 points. Gains of this size indicate that code prompting can meaningfully strengthen the conditional reasoning abilities of LLMs.

The ablation studies were an essential part of these experiments. They confirmed that the performance gains came from the code format itself, not merely from the text simplification that happens when the problem is transformed into code.
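The logic of such a control can be sketched in Python: render the same hand-written rules once as code and once as simplified plain text, so the content is held fixed and only the format changes. This is a hypothetical setup for illustration (`Rule`, `as_code` and `as_simplified_text` are invented names), not a reproduction of the paper’s ablation protocol.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    source: str      # original sentence from the document
    condition: str   # hand-written condition, e.g. "age > 66" (hypothetical)
    conclusion: str  # variable set to True when the condition holds


def as_code(rules: list[Rule]) -> str:
    """Code format: original sentence as a comment, then an if-statement."""
    lines: list[str] = []
    for rule in rules:
        lines.append(f"# {rule.source}")
        lines.append(f"if {rule.condition}:")
        lines.append(f"    {rule.conclusion} = True")
    return "\n".join(lines)


def as_simplified_text(rules: list[Rule]) -> str:
    """Text format: the same condition and conclusion, with no code syntax."""
    return "\n".join(
        f"If {rule.condition}, then {rule.conclusion} is true." for rule in rules
    )


rules = [
    Rule("You can get the pension if you are over 66.", "age > 66", "eligible_for_pension"),
]
print(as_code(rules))
print(as_simplified_text(rules))
```

Because both renderings carry the same conditions and conclusions, an accuracy gap between prompts built with `as_code` and with `as_simplified_text` would point to the format rather than to the simplification, which is the kind of question the ablation addresses.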

Deeper Insights from the Research

The research offers several insights into why code prompting works:

- Efficiency in Learning: Code prompts proved more sample-efficient, needing fewer in-context demonstrations.

- Importance of Retaining Original Text: Keeping the original natural language text as comments in the code was crucial. Performance dropped significantly when these comments were removed, highlighting the importance of retaining the context.

- Semantics Matter: The semantics of the code had to closely mirror the original text. Random or irrelevant code structures did not yield the same gains, underscoring that the code must faithfully represent the logic of the text.

- Superior State Tracking: One of the most significant advantages of code prompts was their ability to track the state of key entities or variables more effectively. This ability is particularly useful in complex reasoning tasks involving multiple steps or conditions.
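The comment-removal ablation described in the list is easy to picture in code. The sketch below assumes a prompt in which each natural-language sentence sits on its own full-line `#` comment; the helper `strip_comment_lines` is hypothetical and ignores inline comments, which this format does not use.

```python
def strip_comment_lines(code_prompt: str) -> str:
    """Drop full-line '#' comments, i.e. the original natural-language text."""
    kept = [
        line for line in code_prompt.splitlines()
        if not line.lstrip().startswith("#")
    ]
    return "\n".join(kept)


# A hand-written example prompt in the "sentence as comment, then code" format.
code_prompt = "\n".join([
    "# I am 67 years old.",
    "age = 67",
    "# You can get the pension if you are over 66.",
    "if age > 66:",
    "    eligible_for_pension = True",
])

print(strip_comment_lines(code_prompt))
```

What survives is only the variable-level state (`age`, `eligible_for_pension`): the explicit entity state that code prompts help the model track, but without the natural language context whose removal the research found to hurt performance.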

Concluding Thoughts: The Future of Reasoning in AI

The study has significant implications for improving the reasoning abilities of LLMs. Code prompting may prove to be more than a single technique: it could become a building block for future work on AI reasoning. The study also shows that presenting a problem as code is not enough on its own; the code must closely align with the logic of the original text.

Key Takeaways:

- Converting text problems into code can significantly enhance reasoning abilities in models trained on both text and code.

- The format and semantics of the code are crucial: what matters is not exposure to code alone but its meaningful integration with the text.

- Efficiency and improved state tracking are two major benefits of code prompts.

- Retaining original natural language text within the code is essential for context understanding.

While this research opens new directions in AI reasoning, it also leaves questions for further exploration. Could this technique be adapted to improve other forms of reasoning? How might it evolve as AI models advance?

Full credit for the original research: Puerto, H., Tutek, M., Aditya, S., Zhu, X., & Gurevych, I. (2024). Code Prompting Elicits Conditional Reasoning Abilities in Text+Code LLMs. arXiv preprint arXiv:2401.10065.

About the Author

Stephen is the founder of The Prompt Index, the #1 AI resource platform. With a background in sales, data analysis, and artificial intelligence, Stephen has used AI to build a free platform that helps others integrate artificial intelligence into their lives. Connect with him on LinkedIn or Telegram.