Turning Natural Language into SQL: A Data-Centric, Multi-Model Pipeline That Teaches Machines to Query Databases Smarter

If you’ve ever wondered how a computer can translate an everyday question like “Show me all orders from last quarter” into a precise database query, you’re not alone. Text-to-SQL has seen rapid progress as AI models get better at understanding human language and database structure at the same time. Yet most improvements so far have focused on prompt design or small changes to how a model is trained, rather than on the data that feeds the model in the first place. The DCMM-SQL approach takes a different route: it puts data quality, data generation, and multiple model perspectives at the center. The result is a fully automated, data-centric pipeline that, when combined with a team of models whose strengths complement each other, produces more accurate SQL queries than a single model trained in isolation. Here’s a breakdown of what this means, why it matters, and how it all fits together.

What DCMM-SQL is trying to solve

In recent years, three broad paths have been popular for text-to-SQL systems:

- Agent-based frameworks that break the task into components like schema linking, SQL generation, and SQL correction.

- Fine-tuned models specifically trained for text-to-SQL.

- Hybrid approaches that mix agent strategies with fine-tuning.

Researchers have tended to chase better final outputs by refining agents or tweaking training, but data quality remained a stubborn bottleneck. Annotating text-to-SQL data is expensive and time-consuming, and real-world datasets aren’t perfect. DCMM-SQL asks a different question: what if we automate the data lifecycle itself? What if we build a pipeline that automatically diagnoses and repairs data problems, creates useful new data focusing on model weaknesses, and uses multiple models trained on diverse data to complement each other? Add an ensemble stage that chooses the best answer from several candidates, and you’ve got a pipeline that can scale with smaller models while still delivering strong performance.

Two big ideas drive DCMM-SQL:

- Data-centric optimization: automatically repair data, synthesize new data, and augment erroneous cases to push the model to learn from its mistakes.

- Multi-Model collaboration training: train several models on differently augmented data, then let them collaborate (and compete) so their strengths cover each other’s weaknesses. An ensemble then picks the best SQL answer among multiple candidates.

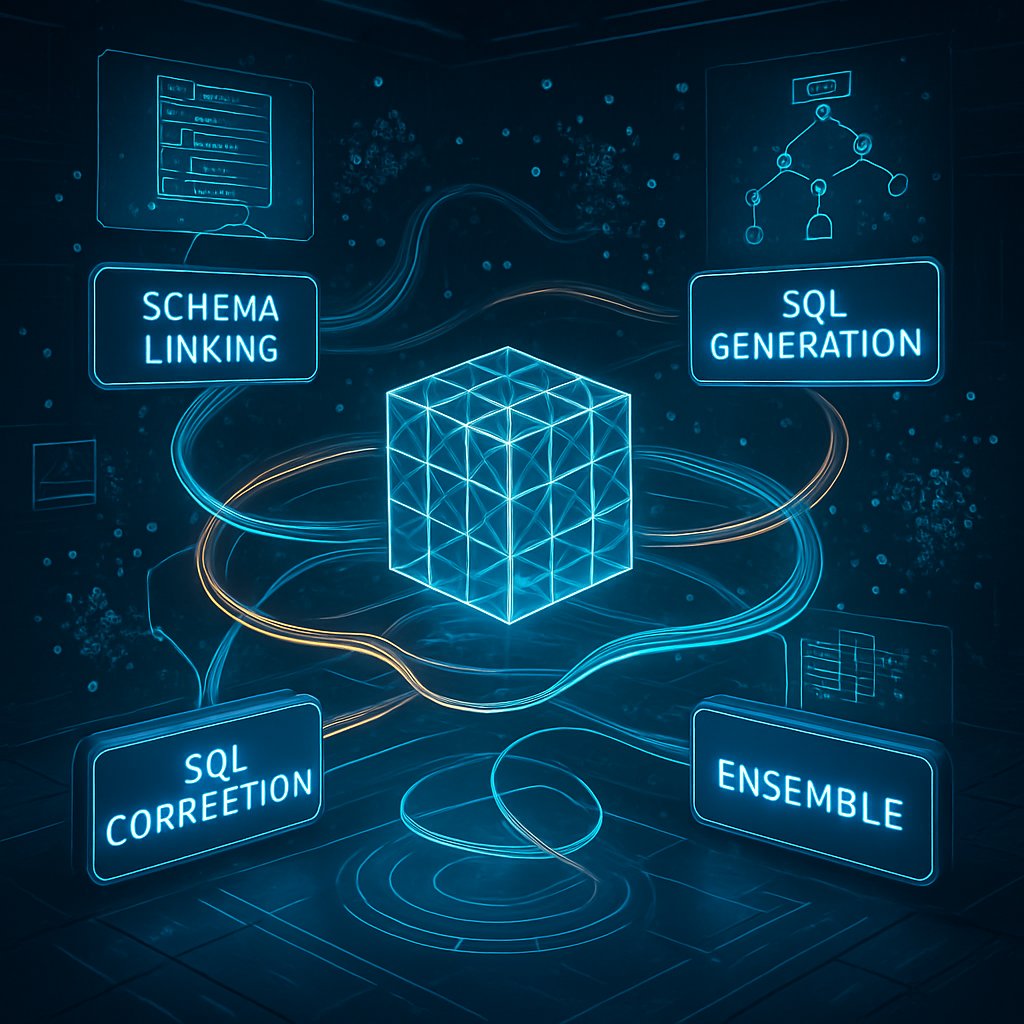

A quick map of the four core modules

DCMM-SQL isn’t a single model; it’s a four-part agent pipeline that works like a factory for converting natural language into SQL. Here’s what happens in practice:

1) Schema Linking

- The database your question refers to may have tons of tables and fields, many of which aren’t relevant. Schema linking is like a smart filter: it sifts through the schema to connect the user’s words to the most relevant tables and columns.

- The authors use a four-step approach:

  - Value Match: quick, surface-level matching using a search method called BM25 and simple substring checks.

  - First Filter: a trained model (in practice, a BERT-based classifier) scores and narrows down the best tables/fields to keep recall high.

  - Extract Keywords: an LLM reads the user’s question to pull out the essential terms.

  - Second Filter: the LLM uses those keywords to sharpen the initial filtering, honing in on the right pieces of the schema.

- Why this matters: good schema linking reduces noise and helps the subsequent SQL generation model focus on relevant parts of the database.
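To make the Value Match step concrete, here is a minimal sketch in plain Python. It is an illustration under assumptions, not the authors' implementation: the `tokenize`, `bm25_scores`, and `value_match` helpers, the `k1` and `b` defaults, and the toy column values are all hypothetical. It scores each column's stored values against the question with a standard Okapi BM25 formula and separately records columns whose values appear verbatim in the question.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase and split on non-alphanumeric characters."""
    return [t for t in re.split(r"[^a-z0-9]+", text.lower()) if t]


def bm25_scores(query, documents, k1=1.5, b=0.75):
    """Score each document against the query with Okapi BM25."""
    docs = [tokenize(d) for d in documents]
    n = len(docs)
    avg_len = sum(len(d) for d in docs) / n if n else 0.0
    # Document frequency: how many documents contain each term.
    df = Counter(term for d in docs for term in set(d))
    query_terms = set(tokenize(query))
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in query_terms:
            if term not in tf:
                continue
            idf = math.log((n - df[term] + 0.5) / (df[term] + 0.5) + 1.0)
            norm = tf[term] + k1 * (1 - b + b * len(d) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


def value_match(question, column_values, top_k=2):
    """Return (BM25 hits, substring hits) for a question.

    column_values maps a hypothetical "table.column" name to sample values.
    """
    names = list(column_values)
    docs = [" ".join(column_values[name]) for name in names]
    ranked = sorted(
        zip(names, bm25_scores(question, docs)),
        key=lambda pair: pair[1],
        reverse=True,
    )
    bm25_hits = [name for name, score in ranked[:top_k] if score > 0]
    q = question.lower()
    substring_hits = [
        name for name in names
        if any(value.lower() in q for value in column_values[name])
    ]
    return bm25_hits, substring_hits


# Toy schema values, invented for illustration.
columns = {
    "customers.city": ["New York", "Berlin", "Tokyo"],
    "orders.status": ["shipped", "pending", "cancelled"],
    "products.category": ["books", "toys", "garden"],
}
bm25_hits, substring_hits = value_match(
    "How many shipped orders went to Berlin?", columns
)
```

Both signals flag `customers.city` and `orders.status` here and leave `products.category` out. In a real system the later filters (the classifier and the LLM passes) would refine this cheap first cut.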

2) SQL Generation

- Once the relevant schema is identified, multiple SQL candidates are generated. Instead of relying on a single model, DCMM-SQL trains several SQL generation models with different data strategies. This is the “diverse viewpoints” part of the system.

- The key twist: the system isn’t just churning out SQL with different prompts. It’s training separate models on data that has been repaired, augmented, and diffused to emphasize different weaknesses found in preliminary runs.

- Why this matters: different models can prefer different patterns in data. By having multiple trained models, you create a richer set of candidate queries to choose from later.

3) SQL Correction

- After an SQL candidate is produced, it is checked and refined. Errors come in two flavors:

  - Syntax errors (the query doesn’t even run).

  - Semantic errors (the query runs but doesn’t answer the question correctly).

- DCMM-SQL corrects these in two steps, semantics first and syntax second:

  - Semantic correction: an LLM helps identify and suggest corrections to the underlying meaning of the SQL.

  - Syntax correction: the semantically corrected version may still have syntactic issues, so a second pass fixes the syntax, guided by database-error feedback.

- Importantly, this correction isn’t just “one model guesses and fixes.” It’s an information-enhanced process: the system uses external signals (like actual database errors) and structured prompts to improve corrections. The result is a more reliable SQL candidate.
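The syntax-correction pass, guided by database errors, can be sketched as a small retry loop. This is a hedged illustration rather than the paper's code: `correct_with_db_feedback` and `toy_fixer` are hypothetical names, SQLite stands in for whatever database is in use, and the rule-based fixer is only a placeholder for the LLM call that would receive the error message in a real system.

```python
import sqlite3


def correct_with_db_feedback(sql, conn, fix_fn, max_rounds=3):
    """Execute a query and pass any database error back to a fixer.

    fix_fn(sql, error_message) -> new_sql is a hypothetical stand-in for
    an LLM-based corrector. Returns (final_sql, last_error_or_None).
    """
    error = None
    for attempt in range(max_rounds + 1):
        try:
            conn.execute(sql).fetchall()
            return sql, None  # the query runs, so stop here
        except sqlite3.Error as exc:
            error = str(exc)
            if attempt < max_rounds:
                sql = fix_fn(sql, error)
    return sql, error


def toy_fixer(sql, error):
    """Rule-based placeholder for the LLM: repairs one known typo."""
    if "no such column" in error:
        return sql.replace("amout", "amount")
    return sql


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
fixed_sql, last_error = correct_with_db_feedback(
    "SELECT SUM(amout) FROM orders", conn, toy_fixer
)
unfixed_sql, unfixed_error = correct_with_db_feedback(
    "SELECT nope FROM orders", conn, toy_fixer, max_rounds=1
)
```

The design point is that the fixer never guesses blindly: it always sees the concrete error text the database produced. A production version would also restrict execution to read-only statements.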

4) Ensemble/Selection

- The final step isn’t about generating more SQL. It’s about picking the best one from a set of strong candidates.

- Instead of a simple voting scheme, DCMM-SQL frames this as a multiple-choice problem over the candidates produced by several models. It groups candidates by their execution results, reasons over the resulting groups, and then uses a dedicated selection model to pick one.

- The idea is to avoid the “most confident equals correct” trap and instead use a higher‑level decision process that considers multiple plausible SQLs and their execution behavior.
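A rough sketch of the grouping idea: run every candidate, bucket the ones that return the same rows, and hand one representative per bucket to a selector. Everything named here is an assumption for illustration. `group_by_result`, `select_candidate`, and `choose_fn` are hypothetical, and `largest_group` is a deliberately simple fallback heuristic, not the dedicated selection model the authors describe.

```python
import sqlite3
from collections import defaultdict


def group_by_result(candidates, conn):
    """Group executable candidates by their order-insensitive result rows."""
    groups = defaultdict(list)
    for sql in candidates:
        try:
            rows = conn.execute(sql).fetchall()
        except sqlite3.Error:
            continue  # candidates that fail to run are dropped
        key = tuple(sorted(rows, key=repr))
        groups[key].append(sql)
    return dict(groups)


def select_candidate(candidates, conn, choose_fn):
    """Pick one query; choose_fn stands in for a learned selection model."""
    groups = group_by_result(candidates, conn)
    if not groups:
        return None
    representatives = [sqls[0] for sqls in groups.values()]
    if len(representatives) == 1:
        return representatives[0]  # all runnable candidates agree
    return choose_fn(representatives, groups)


def largest_group(representatives, groups):
    """Placeholder selector: first query of the most populated group."""
    return max(groups.values(), key=len)[0]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, status TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [(1, "shipped"), (2, "shipped"), (3, "pending")],
)
candidates = [
    "SELECT COUNT(*) FROM orders WHERE status = 'shipped'",
    "SELECT COUNT(id) FROM orders WHERE status = 'shipped'",
    "SELECT COUNT(*) FROM orders",
    "SELECT COUNT(* FROM orders",  # syntax error, filtered out
]
groups = group_by_result(candidates, conn)
chosen = select_candidate(candidates, conn, largest_group)
```

Grouping first means the selector only has to compare distinct behaviors (two here) rather than every candidate string, which is what makes a multiple-choice framing practical.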

Two-step training with Multi-Model collaboration

A standout feature of DCMM-SQL is its two-step training approach, designed to take advantage of multiple models and a broader data spectrum.

Step 1: Preliminary Training (SFT)

- One or more models are first fine-tuned on the original data via supervised fine-tuning (SFT).

- Fine-tuning uses LoRA (Low-Rank Adaptation), which freezes the base model’s weights and trains only small low-rank adapter matrices. In practice, this keeps fine-tuning cheap and helps achieve strong performance without needing gigantic models.
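To see why LoRA is cheap, a bit of arithmetic helps. For a weight matrix of shape d × k, LoRA leaves the matrix frozen and trains two small factors of shapes d × r and r × k instead. The sketch below just counts parameters; the function name and the layer dimensions (4096 × 4096, rank 8) are illustrative assumptions, not values reported for DCMM-SQL.

```python
def lora_param_counts(d, k, r):
    """Compare trainable parameters: full d x k update vs. rank-r LoRA factors."""
    full = d * k        # every entry of the weight matrix is trainable
    lora = r * (d + k)  # one d x r factor plus one r x k factor
    return full, lora, lora / full


# Illustrative sizes only; not taken from the paper.
full, lora, ratio = lora_param_counts(d=4096, k=4096, r=8)
```

For this hypothetical layer, the adapter trains 65,536 parameters instead of 16,777,216, which is under half a percent of the full matrix.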

Step 2: Active Learning Training with Erroneous Data

- Run the preliminary model on the training data to generate predictions.

- Identify errors (where the model’s SQL doesn’t match the ground truth).

- Create new, targeted data through diffusion/augmentation that specifically emphasizes those weaknesses.

- Train new models on this augmented data, producing a set of models (M1 through Mn) that each bring a different strength to the table.

Why this two-step approach matters: it treats incorrect predictions not as a failure, but as a data signal. By reworking those mistakes into new learning material, the system learns to avoid them in the future.
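The error-mining part of Step 2 can be sketched as follows. This is a minimal illustration under assumptions: `collect_errors` and `predict_fn` are hypothetical names, the lookup-table "model" is a toy, and comparing execution results is one reasonable way to decide that a prediction "doesn't match the ground truth" (the article does not spell out the exact matching rule).

```python
import sqlite3


def run(conn, sql):
    """Return order-insensitive rows, or None if the query fails."""
    try:
        return sorted(conn.execute(sql).fetchall(), key=repr)
    except sqlite3.Error:
        return None


def collect_errors(examples, predict_fn, conn):
    """Keep the training examples the preliminary model gets wrong."""
    errors = []
    for ex in examples:
        predicted = predict_fn(ex["question"])
        pred_rows = run(conn, predicted)
        gold_rows = run(conn, ex["sql"])
        if pred_rows is None or pred_rows != gold_rows:
            errors.append({**ex, "predicted_sql": predicted})
    return errors


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0), (2, 20.0)])

examples = [
    {"question": "How many orders?", "sql": "SELECT COUNT(*) FROM orders"},
    {"question": "Total amount?", "sql": "SELECT SUM(amount) FROM orders"},
]
# Toy stand-in for the preliminary model: a lookup table with one mistake.
toy_predictions = {
    "How many orders?": "SELECT COUNT(*) FROM orders",
    "Total amount?": "SELECT MAX(amount) FROM orders",
}
errors = collect_errors(examples, toy_predictions.get, conn)
```

The returned list is the seed for augmentation: each entry carries the question, the gold SQL, and the wrong prediction, which is the information a diffusion prompt would need.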

The data-centric pipeline: repair, verification, and augmentation

Adaptive Data Repair

- The pipeline includes an automatic data repair component. It uses the initial model’s own inferences to re-predict training examples and compare them to the original labeled SQL.

- If the re-predicted SQL is correct according to a validation check, but the original labeled SQL is wrong, that training instance is replaced. This helps fix data quality problems (data contamination) in the Bird and Spider datasets.

- Practically, this led to repairing hundreds of data instances across datasets, which matters when you’re training models that depend on clean supervision.
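The replacement rule described above fits in a few lines. The sketch below is an assumption-laden illustration: `repair_dataset`, `predict_fn`, and `is_valid_fn` are hypothetical names, and the validation check is left as a pluggable function because the article does not specify how it is implemented. The toy validator simply looks up a trusted answer key.

```python
def repair_dataset(examples, predict_fn, is_valid_fn):
    """Replace a label only when the prediction validates and the label does not."""
    repaired, n_replaced = [], 0
    for ex in examples:
        predicted = predict_fn(ex["question"])
        label_ok = is_valid_fn(ex["question"], ex["sql"])
        pred_ok = is_valid_fn(ex["question"], predicted)
        if pred_ok and not label_ok:
            repaired.append({**ex, "sql": predicted, "repaired": True})
            n_replaced += 1
        else:
            repaired.append(ex)  # keep the original label untouched
    return repaired, n_replaced


# Toy answer key standing in for the (unspecified) validation check.
trusted = {
    "How many orders?": "SELECT COUNT(*) FROM orders",
    "Total amount?": "SELECT SUM(amount) FROM orders",
}


def toy_validator(question, sql):
    return trusted.get(question) == sql


examples = [
    # Mislabeled example: the gold SQL counts the wrong table.
    {"question": "How many orders?", "sql": "SELECT COUNT(*) FROM customers"},
    # Correctly labeled example that the model gets wrong.
    {"question": "Total amount?", "sql": "SELECT SUM(amount) FROM orders"},
]
toy_predictions = {
    "How many orders?": "SELECT COUNT(*) FROM orders",
    "Total amount?": "SELECT MAX(amount) FROM orders",
}
repaired, n_replaced = repair_dataset(examples, toy_predictions.get, toy_validator)
```

Note the asymmetry: a wrong prediction never overwrites a label, and a correct prediction only overwrites a label that fails validation. That conservative rule is what keeps the repair step from introducing new noise.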

Error Data Augmentation

- After repair, the algorithm looks at the mistakes the initial model still makes and creates augmented data that reinforces the kind of reasoning the model needs to improve.

- The framework synthesizes additional query-SQL pairs focusing on the exact failure modes. The idea is to diffuse these errors into a richer training mix so later models learn to handle them better.

Query Diffusion vs Example Diffusion

- Query Diffusion: generate semantically similar but differently phrased queries to pair with existing SQLs. This strengthens the model’s robustness to natural language variation.

- Example Diffusion: rewrite an example for different databases or schemas, expanding data coverage across schema variations.

- The surprising takeaway: diffusion based on actual erroneous data (Example Diffusion) tended to outperform diffusion on raw queries. More data isn’t always better if it’s not targeting the right kind of mistakes.

Data Verification: keeping synthetic data honest

To ensure synthetic data isn’t introducing more noise than signal, DCMM-SQL uses a three-pronged verification pipeline:

- Query Check: fluency and similarity checks on synthetic queries (guided by a predictive model).

- SQL Check: syntax validation and execution verification to ensure the SQL actually runs.

- Query-SQL Semantic Consistency Check: three options are used to verify that the SQL actually addresses the query’s intent — zero-shot checks with LLMs, a traditional classifier, or a fine-tuned LLM check model.

Only data that passes these checks is fed back into training. This is crucial to avoid amplifying garbage data.
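As a rough sketch, the three checks can be chained so that a synthetic pair is rejected at the first failure. The names `verify`, `query_check_fn`, and `consistency_fn` are hypothetical, and the two toy checkers below are crude placeholders for the predictive model and the LLM or classifier checks mentioned above. Only the SQL check is implemented for real, by executing the statement against SQLite.

```python
import sqlite3


def sql_check(sql, conn):
    """SQL Check: the statement must execute without error."""
    try:
        conn.execute(sql).fetchall()
        return True
    except sqlite3.Error:
        return False


def verify(pair, conn, query_check_fn, consistency_fn):
    """Run the three checks in order; return (passed, name_of_failed_check)."""
    checks = (
        ("query", lambda: query_check_fn(pair["question"])),
        ("sql", lambda: sql_check(pair["sql"], conn)),
        ("consistency", lambda: consistency_fn(pair["question"], pair["sql"])),
    )
    for name, check in checks:
        if not check():
            return False, name
    return True, None


def toy_query_check(question):
    """Placeholder fluency check: require at least three words."""
    return len(question.split()) >= 3


def toy_consistency(question, sql):
    """Placeholder semantic check: 'how many' questions should use COUNT."""
    return ("how many" in question.lower()) == ("count(" in sql.lower())


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, status TEXT)")

q = "How many orders are there?"
results = [
    verify({"question": q, "sql": "SELECT COUNT(*) FROM orders"},
           conn, toy_query_check, toy_consistency),
    verify({"question": q, "sql": "SELECT COUNT(* FROM orders"},
           conn, toy_query_check, toy_consistency),
    verify({"question": "Orders?", "sql": "SELECT COUNT(*) FROM orders"},
           conn, toy_query_check, toy_consistency),
    verify({"question": q, "sql": "SELECT id FROM orders"},
           conn, toy_query_check, toy_consistency),
]
```

Returning the name of the failed check, rather than a bare boolean, makes it easy to track which filter rejects the most synthetic data and tune it accordingly.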

The benchmarks: Bird and Spider

Bird and Spider are two widely used text-to-SQL benchmarks. The DCMM-SQL team tested their data-centric pipeline on both. They also conducted ablation studies to quantify how much each module contributed to the final performance. In short:

- The data-centric pipeline consistently helped across model bases (e.g., smaller 7B and mid-range 32B models, as well as larger 70B-class models).

- The improvement from data repair, augmentation, and verification grew with model size—larger base models benefited more from the data-centric refinements.

- The error-data diffusion strategies produced the best gains, especially when a strong base model (e.g., a high-capacity Llama model or similar) was used to generate the diffused data.

A note on performance and comparisons

The DCMM-SQL approach is evaluated using Execution Accuracy (EX), a practical measure of whether the produced SQL yields the correct results when run against a database. The researchers compared DCMM-SQL against a suite of baselines, including both prompt-engineering-based methods and training-based methods.
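For readers who want to see EX in code, here is a minimal sketch: run the predicted and gold queries against the same database and count the fraction whose results match. The helper names are hypothetical, and comparing rows without regard to order is a simplifying assumption; the official benchmark evaluators have their own comparison rules.

```python
import sqlite3


def execute(conn, sql):
    """Return order-insensitive rows, or None if the query fails."""
    try:
        return sorted(conn.execute(sql).fetchall(), key=repr)
    except sqlite3.Error:
        return None


def execution_accuracy(pairs, conn):
    """Fraction of (predicted_sql, gold_sql) pairs with matching results."""
    if not pairs:
        return 0.0
    correct = 0
    for predicted, gold in pairs:
        gold_rows = execute(conn, gold)
        pred_rows = execute(conn, predicted)
        if gold_rows is not None and pred_rows == gold_rows:
            correct += 1
    return correct / len(pairs)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0), (2, 20.0)])

pairs = [
    ("SELECT COUNT(*) FROM orders", "SELECT COUNT(id) FROM orders"),     # match
    ("SELECT id FROM orders ORDER BY id DESC", "SELECT id FROM orders"),  # match
    ("SELECT MAX(amount) FROM orders", "SELECT SUM(amount) FROM orders"),  # differ
    ("SELECT * FORM orders", "SELECT * FROM orders"),                     # fails
]
ex_score = execution_accuracy(pairs, conn)
```

Two of the four toy predictions return the same rows as the gold query, so the score is 0.5. Note that the first pair is textually different from its gold query yet still counts as correct, which is exactly why EX is preferred over string matching.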

Key takeaways from the results:

- DCMM-SQL achieved the top results among lightweight models (roughly 70B parameters and below) on the Bird and Spider benchmarks in the authors’ experiments.

- Ablation studies showed that each module (schema linking, data strategies, SQL correction, and the ensemble) contributed about 1–3 percentage points of improvement, underscoring that every piece matters.

- A single integrated model lagged behind the multi-model collaboration approach. The multi-LLM collaboration, coupled with data-driven augmentation and verification, outperformed comparable ensemble setups that didn’t fuse data in the same way.

- When comparing ensemble configurations, DCMM-SQL’s ensemble approach outperformed some other contemporary ensembles in the same space, though the authors acknowledge there’s still room for improvement in the ensemble component.

Real-world implications: why this matters beyond the paper

1) Data quality is the bottleneck—and this work treats data as a first-class citizen

Annotating text-to-SQL datasets is expensive and error-prone. An automated, data-centric pipeline that repairs, verifies, and augments data can dramatically reduce manual effort while pushing models to correct their own weaknesses. For businesses, this could translate into faster iteration cycles when building custom chat interfaces or search tools that rely on natural language queries over a database.

2) Smaller models can punch above their weight

By combining data-centric techniques with multi-model collaboration, DCMM-SQL shows that you don’t necessarily need the biggest models to beat strong baselines. This is especially valuable for organizations that don’t have the compute budgets for gigantic models. The approach provides a blueprint for squeezing more accuracy out of smaller, more accessible models.

3) A blueprint for other tasks

The data synthesis, error diffusion, and verification mechanisms aren’t limited to text-to-SQL. In theory, this data-centric, multi-model approach could be adapted to other structured-prediction tasks where data quality is critical and where multiple model perspectives can complement one another.

Limitations and avenues for refinement

- The ensemble module is powerful but not perfect. The authors acknowledge room for improvement in how the ensemble chooses among candidates. Future work could explore more sophisticated selection criteria, possibly incorporating execution traces or user feedback.

- The pipeline’s success depends on robust data verification signals. If the verification modules misclassify bad data as good, the benefits could fade. Ongoing refinement of the verification prompts and checks will help maintain reliability.

- The approach hinges on having diverse data strategies and multiple models. In practice, organizations will need to balance the cost of training multiple models with the gains in accuracy. The paper’s results are promising for smaller models, but real-world costs vary.

Key takeaways

- Data-first wins: DCMM-SQL demonstrates that automating data repair, synthesis, and augmentation can yield meaningful gains in text-to-SQL tasks, sometimes more reliably than pushing for ever-larger models.

- A four-module agent: Schema Linking, SQL Generation, SQL Correction, and Ensemble form a pipeline that narrows the schema, generates multiple SQL candidates, corrects them with both semantic and syntactic checks, and ends with an informed selection.

- Learn from mistakes: The active learning twist treats model errors as valuable data signals, turning weaknesses into targeted training material.

- Multi-model synergy: Training several models on differently augmented data, then letting them collaborate and be selectively ensembled, provides a smarter way to cover the weaknesses of any single model.

- Realistic performance gains: The approach shows tangible improvements on Bird and Spider benchmarks, with ablation studies confirming the contribution of each module. The results are especially encouraging for lighter-weight models.

Practical tips for applying these ideas

- Start with data quality: If you’re working on a text-to-SQL system, begin with a data-verification loop. Validate synthetic data with multiple checks, and spot-check samples of the data that passes all of them, since a verifier that lets bad data through undermines everything downstream.

- Embrace diversity in training data: Train multiple SQL-generation models with different data compositions. Diversity in training data leads to different strengths, which a thoughtful ensemble can combine effectively.

- Treat errors as learning signals: Implement an active-learning flow that turns mistakes into augmented data. This can yield outsized gains without needing dramatically larger models.

- Use a stepwise approach to training: A two-step training regime (preliminary SFT with LoRA, followed by targeted active learning) can be a practical and scalable way to improve performance without excessive compute.

- Measure what matters: Execution accuracy is a practical, end-to-end metric for text-to-SQL systems. It aligns with real-world use where the ultimate test is whether the query returns the correct results.

Final thoughts

DCMM-SQL isn’t just a clever combination of existing tricks. It’s a thoughtful rethinking of how to build text-to-SQL systems: treat data as the main product, design a modular agent that can be trained and improved in stages, and leverage multiple models to cover more ground than any single model could alone. The result is a workflow that not only pushes performance higher but does so with a practical lens—emphasizing data quality, systematic verification, and scalable, collaborative learning.

Key Takeaways

- A data-centric pipeline—comprising adaptive data repair, error data augmentation, and rigorous data verification—can meaningfully improve text-to-SQL performance, especially for smaller models.

- A four-module agent (schema linking, SQL generation, SQL correction, and ensemble) works in concert to produce accurate, executable SQL queries.

- Multi-Model collaboration training, built on a two-step process (preliminary fine-tuning and active learning with augmented error data), helps different models specialize and complement each other.

- Error-focused data diffusion, especially example diffusion based on erroneous data, can yield stronger learning signals than diffusion over clean queries.

- The approach has practical implications for real-world database querying tools, offering a scalable path to higher accuracy without relying solely on the biggest models.